This article contains spoilers for season four of Black Mirror.

Do we have free will, or are we controlled by a higher power? The capacity to act and determine one’s own actions in an increasingly technologised world is the most prominent theme in the latest season of Netflix’s Black Mirror. And the question writer Charlie Brooker addresses in his bleak sketches is as old as human consciousness itself.

Before the Industrial Revolution and the first sci-fi narrative (Mary Shelley’s Frankenstein, 200 years old this year) warning us of the dangers of replacing an inscrutable ancient god with a scientific one, people tried to determine two opposing but closely related things: how much agency they had, and whether they could rely on miraculous help from above in a time of difficulty. For free will is both a burden and a blessing. While we are imperfect, our vision limited by our perception, surely God is omniscient, omnipotent and wise? When we’re in trouble, he can save us and redeem our mistakes.

Isn’t technology a better god than the previous god? In the past, people asked deities about weather patterns, love, luck, and everything else. Their predictions worked, at best, 50% of the time.

Brooker’s answer to this question is a resounding “no”. Technology is certainly a more efficient god since it has turned magic into reality, but the human issue of free will is still a big part of our relationship with it. Seeing how clever, precise, omniscient and infallible this new god is, we have decided to entrust it with a range of mundane tasks we used to perform ourselves: counting, translating, finding our way, even expressing emotions and, of course, shopping.

A mirror to reality?

This is a tendency Brooker particularly despises. In his view, human laziness is what is going to destroy civilisation. Brooker predicts a world in which the scope of action for free will – our error-prone but nevertheless important decision-making capacity – becomes so narrow that we forget how it feels to be human, how to feel pain and to make mistakes. Thanks to smartphones, tablets, Alexa and Google, we have offloaded to artificial intelligence (AI) anything that requires effort or carries a margin for error. All these functions will now be taken care of by the god of technology, by the All-Seeing Algorithm.

Even the more optimistic episodes of the latest (fourth) season – Hang the DJ and Black Museum – show that the human desire for an all-controlling, all-knowing supreme being does, indeed, result in exactly this kind of supreme being, but not in a good way.

In Hang the DJ, we are shown a world in which finding a mate no longer involves going through a series of disappointments and bouts of happiness. An app finds a person’s perfect match while their “copies”, trapped within what is called “the system”, make mistakes and suffer broken hearts instead of their “originals” who are waiting for the result in real life.

Although the episode’s finale is unexpectedly uplifting and positive, its overall message is not: as people, we have gone too far in shielding ourselves from any errors in the decision-making process. We wanted more perfection and less agency, and that’s exactly what we got.

This perfection, however, comes at a price. In Arkangel, a mother implants into her baby daughter a tracking device which monitors her well-being and detects her location. The device also allows the mother to see the world through her daughter’s eyes and to blur out any disturbing information. However, the daughter fights for her right to make mistakes and to handle the unpleasantness of the world. Keen to break the unhealthy, technology-aided attachment, the girl ends up taking drugs and having sex. Instead of a flawless child, the mother is faced with a rebellious teenager who beats up her over-protective parent and then leaves her.

When good technology goes bad

Instead of being helpful and protective, technology becomes terrifying in Metalhead – a stark black-and-white vignette reminiscent of The Terminator – in which a sole female survivor is pursued by a robotic dog-like creature after a failed warehouse raid. The dog is autonomous, relentless and problem-solving. It does not make mistakes. Although the protagonist manages to outsmart the canine terminator on a number of occasions, in the end the technology is so powerful and ubiquitous that killing herself is her only escape.

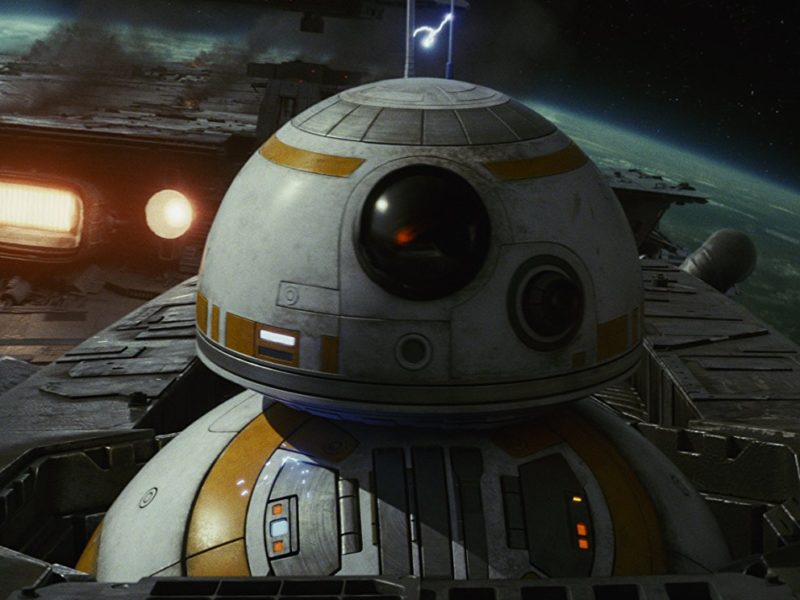

Those hoping to survive the onslaught of technological precision must look for rare flaws in it. This is what happens in USS Callister – probably the best episode in the series. Copies of real people trapped inside a private version of a space video game attempt to escape from it through a wormhole which temporarily appears, only to find themselves in the commercial version of the same game. Although they have more agency, they are still not entirely free.

And again, in Crocodile, the technology does not fail, but the human being does, try as she might. The recently invented memory-retrieving device prevents Mia from concealing the murders she has committed. Interestingly enough, Brooker makes us sympathise with the murderer as we witness her struggle to evade the relentless power of technology. Her agency is thwarted by the device, leaving her with no chance of escape.

In all episodes, Brooker shows the inevitable end of human agency as daily routines are taken over by artificial intelligence. Technology leaves only a small margin for human error. This is an excellent god. It has realised so many of our dreams, but is it the god we wanted?

Perhaps we should trust ourselves more and rely less on unfailing and dependable technology. Surrendering to it means losing that vital element that makes us human: the ability to make mistakes and to grow by learning from them.

Helena Bassil-Morozow, Lecturer in Media and Journalism, Glasgow Caledonian University

This article was originally published on The Conversation.